ASP.NET Core 7 comes with built-in rate limiting middleware, built on top of the System.Threading.RateLimiting namespace. It is one of the features I liked the most, so I thought it was worth sharing my take on it.

Why does an API need rate limiting?

When building APIs, one of the biggest concerns is ensuring they stay responsive under load. Unfortunately, our resources are not unlimited, and planning the right strategy is crucial to prevent exhausting them, especially in a shared environment where one client’s traffic affects every other user.

Due to the public nature of an API, someone could attempt to overwhelm the server with a massive number of requests in a very short interval, or even use more advanced forms of attack such as DDoS, slowing down its responses or even making it unavailable.

You cannot allow that; you need a middleware to protect your API. Developers have implemented many of these middlewares or used third-party packages for years. Finally, .NET 7 comes with a built-in solution.

Which type of rate limiter should I use?

Depending on your needs, you may have to choose among the following algorithms:

- Fixed window limit

This algorithm limits the maximum number of requests allowed within a fixed time window.

- Concurrency limit

This algorithm limits the maximum number of requests being processed at the same time.

- Token bucket limit

This algorithm limits the number of requests based on a defined amount of allowed requests, or “tokens”, which requests consume and the limiter replenishes periodically.

- Sliding window limit

This algorithm works similarly to the fixed window limit but slides the maximum allowed requests through defined segments, working like a rolling tape with tracked pointers. Again, the picture on the link makes it easier to grasp.

The minimum to get started!

I’ve created a project to play around with the many options we can use to set up the middleware. The project is a simple Minimal API (check my previous post to learn more about it!) with one endpoint that lists a bunch of "GitHubIssues". I decided to keep the Program.cs very lean with minimal configuration while implementing each limiter within integration tests, so it’s more organized and a good sandbox for learning purposes where you can make isolated changes and test results. Let’s understand this step by step.

Program.cs

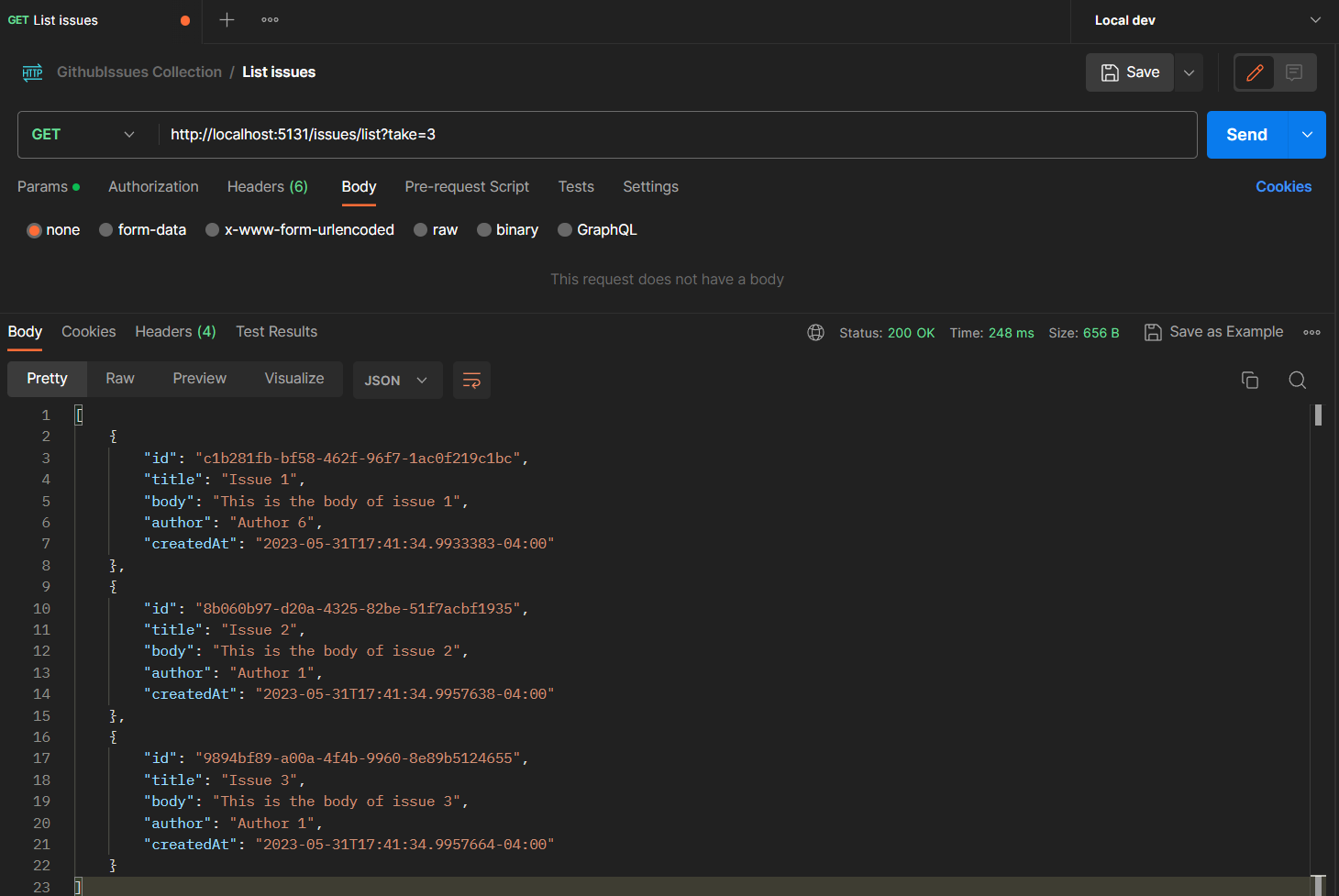

After running the NET.Features.MinimalAPI project and using Postman to request the /list/3 route, this is the result:

Everything is pretty standard so far. Let’s start to make it more interesting 😁

Add and Use RateLimiter

To use the middleware, you must first add it to the IServiceCollection through the AddRateLimiter() extension method. Likewise, the WebApplication (aka app) requires the UseRateLimiter() extension method to be called for the middleware to take effect. No surprises for anyone accustomed to the extension conventions in the .NET API ecosystem.

Notice I also added a policy with the options of AddRateLimiter:

    services.AddRateLimiter(options =>
    {
        options.AddPolicy(PolicyNames.AuthenticatedUserPolicy, new AuthenticatedUserPolicy());
    });

Don’t worry about it now. I’ll cover it in more detail as we advance, but you need to learn some concepts about limiters before going any further into it.

Lastly, the partial class Program at the bottom is a little trick to make Program visible to the test project, where we’ll be building this pipeline within the tests.
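Since the original Program.cs is only shown as an image, here is a minimal sketch of the same wiring. It is not the project's actual file: the "fixed" policy name, its limits, and the endpoint body are hypothetical stand-ins, and it assumes an ASP.NET Core 7 web project.

```csharp
using System;
using System.Linq;
using System.Threading.RateLimiting;
using Microsoft.AspNetCore.Builder;
using Microsoft.AspNetCore.Http;
using Microsoft.AspNetCore.RateLimiting;
using Microsoft.Extensions.DependencyInjection;

var builder = WebApplication.CreateBuilder(args);

// 1. Register the rate limiting services and at least one policy.
builder.Services.AddRateLimiter(options =>
{
    // The default rejection status code is 503; 429 is the conventional choice.
    options.RejectionStatusCode = StatusCodes.Status429TooManyRequests;

    // Hypothetical named policy: 10 requests per minute.
    options.AddFixedWindowLimiter("fixed", limiterOptions =>
    {
        limiterOptions.PermitLimit = 10;
        limiterOptions.Window = TimeSpan.FromMinutes(1);
    });
});

var app = builder.Build();

// 2. Add the middleware to the pipeline.
app.UseRateLimiter();

// Hypothetical stand-in for the endpoint that lists issues.
app.MapGet("/list/{count:int}", (int count) =>
        Enumerable.Range(1, count).Select(i => new { Id = i, Title = $"Issue #{i}" }))
    .RequireRateLimiting("fixed");

app.Run();

// 3. Expose the implicit Program class so a test project can reference it.
public partial class Program { }
```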

Testing some limits

Before we test each of the available limiter options, it’s crucial to understand how they’re structured in the project, since all examples follow the same structure. In the template below, I’m using the {LimiterType} keyword as a placeholder for the limiter type you want to implement for your API.

    services.AddRateLimiter(options =>
    {
        options.GlobalLimiter = PartitionedRateLimiter.Create<HttpContext, string>(httpContext =>
            RateLimitPartition.Get{LimiterType}Limiter(
                partitionKey: {string},
                factory: {Func<string, {LimiterType}Options>}
            )
        );

        options.OnRejected = {Func<OnRejectedContext, CancellationToken, ValueTask>?};
    });

The options.GlobalLimiter is meant to set one global limiter for all requests and expects a PartitionedRateLimiter<HttpContext>. You can create a partitioned rate limiter using the Create method of the PartitionedRateLimiter static class, combined with the Get{LimiterType}Limiter method of the RateLimitPartition static class for the type of limiter you want to build.

The Get{LimiterType}Limiter method expects a partition key, which is crucial for identifying the requester and applying the rate limit to it. Requests that share a key share a limiter, so keep it consistent. It also expects a factory, a Func<TKey, {LimiterType}Options> that builds the options for that partition.

The last thing is configuring what happens when the API rejects requests. You can send a response message or log the rejection as you see fit; however, for consistency, it’s essential to set the StatusCode accordingly, and 429 (Too Many Requests) is the right one for this case.
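To make the template concrete, here is one way it could be filled in with a fixed window limiter. This is a sketch rather than the project's code: partitioning by remote IP address, the limits, and the response message are all assumptions chosen for illustration.

```csharp
using System;
using System.Threading.RateLimiting;
using Microsoft.AspNetCore.Builder;
using Microsoft.AspNetCore.Http;
using Microsoft.AspNetCore.RateLimiting;
using Microsoft.Extensions.DependencyInjection;

var builder = WebApplication.CreateBuilder(args);

builder.Services.AddRateLimiter(options =>
{
    // One global limiter for every request, partitioned per client.
    options.GlobalLimiter = PartitionedRateLimiter.Create<HttpContext, string>(httpContext =>
        RateLimitPartition.GetFixedWindowLimiter(
            // Assumption: the remote IP identifies the requester.
            partitionKey: httpContext.Connection.RemoteIpAddress?.ToString() ?? "anonymous",
            factory: key => new FixedWindowRateLimiterOptions
            {
                PermitLimit = 10,
                Window = TimeSpan.FromMinutes(1),
                QueueLimit = 0
            }));

    // Runs for every rejected request: set 429 and write a short message.
    options.OnRejected = async (context, cancellationToken) =>
    {
        context.HttpContext.Response.StatusCode = StatusCodes.Status429TooManyRequests;
        await context.HttpContext.Response.WriteAsync(
            "Too many requests. Please try again later.", cancellationToken);
    };
});

var app = builder.Build();
app.UseRateLimiter();
app.MapGet("/", () => "Hello");
app.Run();
```

If all you need is the status code, setting options.RejectionStatusCode is enough and OnRejected can be left out.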

Note: these tests are built as a simple sandbox for quick study. To keep them repeatable, I avoided complex scenarios that simulate delayed window intervals, but you can certainly change the test cases at will to run your own experiments.

With everything set, these are the examples I implemented in the NET.Features.MinimalAPI.Tests project using xUnit and WebApplicationFactory. I also abstracted things like building the WebApplication and the HttpClient into the base class, so there is no need to repeat this for every test. It also contains reusable methods for getting the partition key and handling the rate limiter rejection.
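A test in this style could look roughly like the sketch below. The class and method names are hypothetical and do not come from the project's base class; it assumes the xunit and Microsoft.AspNetCore.Mvc.Testing packages, the /list/3 route mentioned earlier, and a constant partition key so every request lands in the same partition. Any policy the endpoint itself requires still applies on top of the global limiter.

```csharp
using System;
using System.Net;
using System.Net.Http;
using System.Threading.RateLimiting;
using System.Threading.Tasks;
using Microsoft.AspNetCore.Builder;
using Microsoft.AspNetCore.Hosting;
using Microsoft.AspNetCore.Http;
using Microsoft.AspNetCore.Mvc.Testing;
using Microsoft.AspNetCore.RateLimiting;
using Microsoft.Extensions.DependencyInjection;
using Xunit;

public class FixedWindowLimiterSketchTests : IClassFixture<WebApplicationFactory<Program>>
{
    private readonly WebApplicationFactory<Program> _factory;

    public FixedWindowLimiterSketchTests(WebApplicationFactory<Program> factory) => _factory = factory;

    [Fact]
    public async Task FifteenRequests_WithLimitOfTenPerMinute_FiveAreRejected()
    {
        // Layer a global limiter on top of the application's own configuration.
        using HttpClient client = _factory
            .WithWebHostBuilder(webHost => webHost.ConfigureServices(services =>
                services.AddRateLimiter(options =>
                {
                    options.RejectionStatusCode = StatusCodes.Status429TooManyRequests;
                    options.GlobalLimiter = PartitionedRateLimiter.Create<HttpContext, string>(httpContext =>
                        RateLimitPartition.GetFixedWindowLimiter("test-client", key =>
                            new FixedWindowRateLimiterOptions
                            {
                                PermitLimit = 10,
                                Window = TimeSpan.FromMinutes(1)
                            }));
                })))
            .CreateClient();

        int rejected = 0;
        for (int i = 0; i < 15; i++)
        {
            using HttpResponseMessage response = await client.GetAsync("/list/3");
            if (response.StatusCode == HttpStatusCode.TooManyRequests)
            {
                rejected++;
            }
        }

        Assert.Equal(5, rejected);
    }
}
```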

FixedWindowLimiter

- ListIssues_WhenFixedWindowLimitOf10RequestsPerMinute_5out15RequestsShouldBeRejected

Tests a scenario where up to 10 requests can be made within a time window of 1 minute; when making 15 requests in a row, 5 requests should be rejected.
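The same numbers can be reproduced outside ASP.NET Core by using the limiter class directly. This is a minimal console sketch, not the project's test; it assumes the System.Threading.RateLimiting package is referenced.

```csharp
using System;
using System.Threading.RateLimiting;

// Allow 10 permits per 1-minute window and reject anything beyond that (no queue).
using var limiter = new FixedWindowRateLimiter(new FixedWindowRateLimiterOptions
{
    PermitLimit = 10,
    Window = TimeSpan.FromMinutes(1),
    QueueLimit = 0
});

int accepted = 0, rejected = 0;
for (int i = 0; i < 15; i++)
{
    // AttemptAcquire never waits: the returned lease is either acquired or not.
    using RateLimitLease lease = limiter.AttemptAcquire(permitCount: 1);
    if (lease.IsAcquired) accepted++; else rejected++;
}

Console.WriteLine($"accepted={accepted}, rejected={rejected}"); // accepted=10, rejected=5
```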

Queueing exceeding requests rather than rejecting them

This option is available to many other limiter types, and it allows queueing a certain number of requests instead of instantly rejecting them when surpassing the permitted limit. You can also choose the QueueProcessingOrder between OldestFirst or NewestFirst to lease the queued calls.

- ListIssues_WhenQueueingFixedWindowLimitOf10RequestsPer10Sec_0out15RequestsShouldBeRejected

Tests a scenario similar to the previous test, but with QueueLimit = 5 the output is quite different, since exceeding requests are queued instead of instantly rejected.

Note: Beware that the time window you use will impact the overall response time, and you can check it by observing the test runner. It’s essential to consider it when setting both the time window and the queue limit to keep your API responsive to the clients.
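The queueing behavior can also be observed with the limiter class alone. The sketch below is an illustration under assumptions, not the project's test: the window is shortened to 500 ms so the example finishes quickly, and it requires the System.Threading.RateLimiting package.

```csharp
using System;
using System.Linq;
using System.Threading.RateLimiting;
using System.Threading.Tasks;

// 10 permits per window, plus room for 5 waiting requests.
using var limiter = new FixedWindowRateLimiter(new FixedWindowRateLimiterOptions
{
    PermitLimit = 10,
    Window = TimeSpan.FromMilliseconds(500), // shortened for the example
    QueueLimit = 5,
    QueueProcessingOrder = QueueProcessingOrder.OldestFirst
});

// AcquireAsync waits in the queue when no permit is available right now.
Task<RateLimitLease>[] pending = Enumerable.Range(0, 15)
    .Select(index => limiter.AcquireAsync(permitCount: 1).AsTask())
    .ToArray();

// The first 10 complete immediately; the other 5 complete once the window replenishes.
RateLimitLease[] leases = await Task.WhenAll(pending);

int accepted = leases.Count(lease => lease.IsAcquired);
int rejected = leases.Length - accepted;
Console.WriteLine($"accepted={accepted}, rejected={rejected}"); // accepted=15, rejected=0

foreach (RateLimitLease lease in leases)
{
    lease.Dispose();
}
```

The queued callers only get their answer after the next window starts, which is the latency cost the note above warns about.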

Chaining limiters

One way to make the overall configuration a bit more granular is chaining limiters using different options with PartitionedRateLimiter.CreateChained.

- ListIssues_WhenChainedFixedWindowLimitOf60RequestsPerMinute_10out20RequestsShouldBeRejected

Tests a scenario where up to 10 requests can be made within an initial time window of 10 seconds, while also allowing a total of 60 requests per minute; consequently, making 20 requests at once will reject 10 requests.
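Here is a sketch of the chained setup outside the web pipeline. The resource type is a plain string standing in for HttpContext, and "client-a" is a hypothetical partition key; it assumes the System.Threading.RateLimiting package.

```csharp
using System;
using System.Threading.RateLimiting;

// Hypothetical partition key standing in for a client identifier.
const string client = "client-a";

// Short-term limit: 10 requests per 10 seconds.
using PartitionedRateLimiter<string> burst = PartitionedRateLimiter.Create<string, string>(resource =>
    RateLimitPartition.GetFixedWindowLimiter(resource, key => new FixedWindowRateLimiterOptions
    {
        PermitLimit = 10,
        Window = TimeSpan.FromSeconds(10)
    }));

// Long-term limit: 60 requests per minute.
using PartitionedRateLimiter<string> sustained = PartitionedRateLimiter.Create<string, string>(resource =>
    RateLimitPartition.GetFixedWindowLimiter(resource, key => new FixedWindowRateLimiterOptions
    {
        PermitLimit = 60,
        Window = TimeSpan.FromMinutes(1)
    }));

// A request must pass every limiter in the chain, in order.
using PartitionedRateLimiter<string> chained = PartitionedRateLimiter.CreateChained(burst, sustained);

int accepted = 0, rejected = 0;
for (int i = 0; i < 20; i++)
{
    using RateLimitLease lease = chained.AttemptAcquire(client);
    if (lease.IsAcquired) accepted++; else rejected++;
}

Console.WriteLine($"accepted={accepted}, rejected={rejected}"); // accepted=10, rejected=10
```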

ConcurrencyLimiter

- ListIssues_WhenConcurrencyLimitOf2Requests_8out10RequestsShouldBeRejected

Tests a scenario where concurrency is limited to 2, which means only 2 requests are allowed to proceed at a time. When making 10 parallel requests with Task.WhenAll, 8 should be rejected.
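The difference from the window-based limiters is that a permit comes back as soon as a request finishes. The sketch below simulates 10 in-flight requests by holding their leases; it is an illustration that assumes the System.Threading.RateLimiting package, not the project's test.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.RateLimiting;

// Only 2 requests may be in flight at once; nothing is queued.
using var limiter = new ConcurrencyLimiter(new ConcurrencyLimiterOptions
{
    PermitLimit = 2,
    QueueLimit = 0
});

// Holding a lease simulates a request that is still being processed.
var inFlight = new List<RateLimitLease>();
int rejected = 0;
for (int i = 0; i < 10; i++)
{
    RateLimitLease lease = limiter.AttemptAcquire();
    if (lease.IsAcquired)
    {
        inFlight.Add(lease);
    }
    else
    {
        rejected++;
        lease.Dispose();
    }
}

Console.WriteLine($"in flight={inFlight.Count}, rejected={rejected}"); // in flight=2, rejected=8

// Disposing a lease marks the request as finished and frees its slot.
inFlight[0].Dispose();
inFlight.RemoveAt(0);

using RateLimitLease next = limiter.AttemptAcquire();
bool acquiredAfterRelease = next.IsAcquired;
Console.WriteLine($"acquired after release={acquiredAfterRelease}"); // True

foreach (RateLimitLease lease in inFlight)
{
    lease.Dispose();
}
```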

TokenBucketLimiter

- ListIssues_WhenTokenBucketLimitOf20Requests_5out25RequestsShouldBeRejected

Tests a scenario where a “bucket” filled with 20 tokens (meaning possible requests) receives a range of 25 requests, depleting it completely, hence getting 5 requests rejected until it’s filled again. The limiter is configured to replenish the bucket with 10 tokens every minute.
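The configuration described above maps onto the options roughly as follows. This is a console sketch assuming the System.Threading.RateLimiting package; the project's actual options may differ.

```csharp
using System;
using System.Threading.RateLimiting;

// The bucket starts full with 20 tokens and gains 10 tokens every minute.
using var limiter = new TokenBucketRateLimiter(new TokenBucketRateLimiterOptions
{
    TokenLimit = 20,
    TokensPerPeriod = 10,
    ReplenishmentPeriod = TimeSpan.FromMinutes(1),
    QueueLimit = 0
});

int accepted = 0, rejected = 0;
for (int i = 0; i < 25; i++)
{
    // Each request consumes one token; tokens are not returned when the lease is disposed.
    using RateLimitLease lease = limiter.AttemptAcquire(permitCount: 1);
    if (lease.IsAcquired) accepted++; else rejected++;
}

Console.WriteLine($"accepted={accepted}, rejected={rejected}"); // accepted=20, rejected=5
```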

SlidingWindowLimiter

- ListIssues_WhenSlidingWindowLimitOf10Requests_10out20RequestsShouldBeRejected

Tests a scenario where, within a time window of 30 seconds split into 3 segments of 10 seconds, only 10 requests are allowed; a range of 20 requests is made in a row, hence resulting in 10 requests rejected.
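A sketch of those options with the limiter class on its own is shown below, assuming the System.Threading.RateLimiting package. Note that in this API PermitLimit applies to the whole window, while SegmentsPerWindow only controls how gradually used permits become available again.

```csharp
using System;
using System.Threading.RateLimiting;

// 10 permits per 30-second window, tracked in 3 segments of 10 seconds each.
using var limiter = new SlidingWindowRateLimiter(new SlidingWindowRateLimiterOptions
{
    PermitLimit = 10,
    Window = TimeSpan.FromSeconds(30),
    SegmentsPerWindow = 3,
    QueueLimit = 0
});

int accepted = 0, rejected = 0;
for (int i = 0; i < 20; i++)
{
    using RateLimitLease lease = limiter.AttemptAcquire(permitCount: 1);
    if (lease.IsAcquired) accepted++; else rejected++;
}

Console.WriteLine($"accepted={accepted}, rejected={rejected}"); // accepted=10, rejected=10
```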

Custom policies

Back to the Program.cs at the beginning, I told you not to worry about the options.AddPolicy statement until I showed all the basics about rate limiting; now it’s time to discuss that. Policies can be created to enforce rate limiting on all or specific endpoints of your API. They must implement the IRateLimiterPolicy<TPartitionKey> interface, which defines a GetPartition(HttpContext httpContext) method returning the RateLimitPartition we have seen many times in this article.

For this project, I created the AuthenticatedUserPolicy to apply different rate limits depending on whether the requester is authenticated. With this policy, authenticated users can make up to 500 requests per minute, while non-authenticated users are restricted to 40 requests per minute.

It’s worth mentioning that I applied this policy to all routes with the .RequireRateLimiting() extension method, but you could make it more granular with different policies (or none at all) for each endpoint.
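A policy along those lines could be sketched as follows. This is not the project's implementation: the partition keys (user name or remote IP), the fixed window algorithm, and the constants are assumptions. The anonymous limit of 40 is chosen to match the test and Postman results described in this article, where 10 out of 50 anonymous requests are rejected.

```csharp
using System;
using System.Threading;
using System.Threading.RateLimiting;
using System.Threading.Tasks;
using Microsoft.AspNetCore.Http;
using Microsoft.AspNetCore.RateLimiting;

public sealed class AuthenticatedUserPolicy : IRateLimiterPolicy<string>
{
    // Illustrative limits per minute; adjust to your own needs.
    private const int AuthenticatedPermitLimit = 500;
    private const int AnonymousPermitLimit = 40;

    // Called when this policy rejects a request.
    public Func<OnRejectedContext, CancellationToken, ValueTask>? OnRejected =>
        (context, cancellationToken) =>
        {
            context.HttpContext.Response.StatusCode = StatusCodes.Status429TooManyRequests;
            return ValueTask.CompletedTask;
        };

    public RateLimitPartition<string> GetPartition(HttpContext httpContext)
    {
        if (httpContext.User.Identity is { IsAuthenticated: true } identity)
        {
            // Assumption: the user name identifies an authenticated caller.
            return RateLimitPartition.GetFixedWindowLimiter(
                $"user:{identity.Name}",
                key => CreateOptions(AuthenticatedPermitLimit));
        }

        // Assumption: anonymous callers are told apart by their remote IP address.
        string address = httpContext.Connection.RemoteIpAddress?.ToString() ?? "unknown";
        return RateLimitPartition.GetFixedWindowLimiter(
            $"ip:{address}",
            key => CreateOptions(AnonymousPermitLimit));
    }

    private static FixedWindowRateLimiterOptions CreateOptions(int permitLimit) => new()
    {
        PermitLimit = permitLimit,
        Window = TimeSpan.FromMinutes(1),
        QueueLimit = 0
    };
}
```

Prefixing the keys with "user:" and "ip:" keeps the two groups from ever sharing a partition by accident.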

Let’s put it to the test:

- ListIssues_WhenUnderAuthenticatedUserPolicy_0out50RequestsShouldBeRejected

Tests a scenario where authenticated users make 50 requests within a minute, hence no requests are rejected.

- ListIssues_WhenUnderAuthenticatedUserPolicy_10out50RequestsShouldBeRejected

Tests a scenario where non-authenticated users make the same 50 requests within a minute, hence 10 requests are rejected.

Running the NET.Features.MinimalAPI project, I’ll use Postman to show the policy in action. I know there are specific tools for this kind of test, but I bet you have Postman installed, so there’s an easy way to make consecutive requests to test our policy by setting a runner to create multiple iterations in the collection:

Notice that the server starts to reject requests after the 40th non-authenticated request. The policy works fine!

Applying policies on Controllers

For larger applications where the API is based on actual controllers, you could apply the same policy using EnableRateLimitingAttribute, like this:

    [EnableRateLimiting(PolicyNames.AuthenticatedUserPolicy)]
    public class GitHubIssuesController
    {
        public async Task<IActionResult> GetIssues() { ... }
    }

Or even something more fine-grained:

    public class GitHubIssuesController
    {
        [DisableRateLimiting]
        public async Task<IActionResult> GetApiInfo() { ... }

        [EnableRateLimiting(PolicyNames.AuthenticatedUserPolicy)]
        public async Task<IActionResult> GetIssues() { ... }

        // Illustrative name: a separate action that uses a different policy.
        [EnableRateLimiting(PolicyNames.AnotherPolicy)]
        public async Task<IActionResult> GetOtherIssues() { ... }
    }

Final thoughts

I hope you’ve enjoyed this article and learned something through the test cases showing the capabilities of the built-in rate limiters in .NET 7. But don’t limit yourself (🤣) to those! Please clone the code and play around with adding more complex scenarios. Never forget how important it is to make your APIs more secure and available. Happy coding!

Links worth checking

- Announcing Rate Limiting for .NET - Brennan Conroy

- Sliding Window Rate Limiter

- Token Bucket

- Sliding Window