In the previous post, I talked about Domain events and Event Sourcing. Now let’s look at persisting, projecting, and reading events using MartenDB.

- Part 1 - Project’s overview and Domain-driven Design

- Part 2 - Strategic and Tactical Design

- Part 3 - Domain events and Event Sourcing

- Part 4 - Implementing Event Sourcing with MartenDB

- Part 5 - Wrapping up infrastructure (Docker, API gateway, IdentityServer, Kafka)

- Part 6 - Angular SPA interacting with API

What is MartenDB?

Marten is a .NET library that allows developers to use the PostgreSQL database as both a document database and a fully-featured event store – with the document features serving as the out-of-the-box mechanism for projected “read side” views of your events.

As their website describes, it was built to replace RavenDB inside a very large web application that was suffering from stability and performance issues.

While studying event sourcing, I looked for an out-of-the-box implementation so I could move this project from a relational database to an event-sourced model more easily and quickly. The library follows the .NET Core Support Lifecycle to determine platform compatibility, and its maintainers seem to be constantly improving it. You can check the project on GitHub and its nice documentation at MartenDb.io. I followed that documentation to use what I needed for this project.

Postgres as document database

PostgreSQL is a powerful, open source object-relational database system with over 30 years of active development that has earned it a strong reputation for reliability, feature robustness, and performance.

As you’ve seen before, we need to persist serialized events into an event store, and a document-oriented database suits that better than a relational one. Postgres is a viable option, especially considering its support for JSON, which made it a perfect match for MartenDB.

Writing and reading events

To ease writing and reading events with Marten, I opted for a pragmatic abstraction: the IEventStoreRepository interface, which you can find in the EcommerceDDD.Core project. Its implementation, MartenRepository, lives in EcommerceDDD.Core.Infrastructure/Marten.

I don’t advocate for generic repositories when designing them for aggregate roots, but this was a straightforward solution for persisting domain events. Still, a type constraint ensures that the repository works only for aggregate roots, whose base class contains a queue of uncommitted IDomainEvents, ready to be stored.

Last but not least, the repository implementation uses an abstraction called IDocumentStore, which lets us open different flavors of what Marten calls a session. Notice how it resembles a Unit of Work, or the DbContext in Entity Framework.
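To make the constraint more concrete, here is a minimal sketch of what such a base class and repository contract could look like. Only IEventStoreRepository, IDomainEvent, AppendEventsAsync, and FetchStreamAsync come from the text; the other member names (`AppendEvent`, `GetUncommittedEvents`, `ClearUncommittedEvents`), the `QuoteOpened` event, and the return types are assumptions for illustration and may differ from the actual code in EcommerceDDD.Core.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

public interface IDomainEvent { }

// Hypothetical base class: queues new events until a repository stores them.
public abstract class AggregateRoot
{
    private readonly Queue<IDomainEvent> _uncommittedEvents = new();

    public Guid Id { get; protected set; }

    // Behavior methods call this once they decide that something happened.
    protected void AppendEvent(IDomainEvent domainEvent) =>
        _uncommittedEvents.Enqueue(domainEvent);

    // Returns a copy, so clearing the queue later does not affect the caller.
    public IReadOnlyCollection<IDomainEvent> GetUncommittedEvents() =>
        _uncommittedEvents.ToArray();

    public void ClearUncommittedEvents() => _uncommittedEvents.Clear();
}

// The generic constraint is what limits the repository to aggregate roots.
public interface IEventStoreRepository<TA> where TA : AggregateRoot
{
    Task<long> AppendEventsAsync(TA aggregate);
    Task<TA> FetchStreamAsync(Guid id, int? version = null);
}

// Hypothetical event and aggregate, only to show the queue in action.
public record QuoteOpened(Guid QuoteId) : IDomainEvent;

public class Quote : AggregateRoot
{
    public static Quote Open(Guid id)
    {
        var quote = new Quote { Id = id };
        quote.AppendEvent(new QuoteOpened(id));
        return quote;
    }
}
```

With this shape, `IEventStoreRepository<Quote>` compiles, while `IEventStoreRepository<string>` does not, which is the whole point of the constraint.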

AppendEventsAsync(TA aggregate)

This method first opens a store session, and then the underlying code does the following:

- Gets all uncommitted events from the aggregate.

- Calculates the aggregate's next version, which is the value returned.

- Clears all uncommitted events from the aggregate.

- Appends the events collected in the first step to the stream.

- Calls SaveChangesAsync to commit the transaction.

In the end, you can expect the database to contain a snapshot of the system state.
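The steps above can be sketched as follows. This is an in-memory stand-in written for illustration, not Marten's session API and not the repository's actual code: `InMemorySession`, `InMemoryQuoteRepository`, the simplified `Quote`, and the `ProductAdded` event are all hypothetical names.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

public interface IDomainEvent { }
public record ProductAdded(Guid ProductId, int Quantity) : IDomainEvent; // hypothetical event

// Hypothetical aggregate with a queue of uncommitted events.
public class Quote
{
    private readonly Queue<IDomainEvent> _uncommitted = new();
    public Guid Id { get; } = Guid.NewGuid();
    public long Version { get; } // version of the last stored event; stays 0 in this sketch

    public void AddProduct(Guid productId, int quantity) =>
        _uncommitted.Enqueue(new ProductAdded(productId, quantity));

    public IDomainEvent[] GetUncommittedEvents() => _uncommitted.ToArray();
    public void ClearUncommittedEvents() => _uncommitted.Clear();
}

// Stand-in for a store session: appended events become visible only after SaveChangesAsync.
public class InMemorySession
{
    private readonly Dictionary<Guid, List<IDomainEvent>> _streams;
    private readonly List<(Guid StreamId, IDomainEvent Event)> _pending = new();

    public InMemorySession(Dictionary<Guid, List<IDomainEvent>> streams) => _streams = streams;

    public void Append(Guid streamId, IEnumerable<IDomainEvent> events)
    {
        foreach (var e in events) _pending.Add((streamId, e));
    }

    public Task SaveChangesAsync()
    {
        foreach (var (streamId, e) in _pending)
        {
            if (!_streams.TryGetValue(streamId, out var stream))
                _streams[streamId] = stream = new List<IDomainEvent>();
            stream.Add(e);
        }
        _pending.Clear();
        return Task.CompletedTask;
    }
}

public class InMemoryQuoteRepository
{
    public Dictionary<Guid, List<IDomainEvent>> Streams { get; } = new();

    public async Task<long> AppendEventsAsync(Quote aggregate)
    {
        var session = new InMemorySession(Streams);           // open a session
        var events = aggregate.GetUncommittedEvents();        // 1. copy the uncommitted events
        var nextVersion = aggregate.Version + events.Length;  // 2. compute the version to return
        aggregate.ClearUncommittedEvents();                   // 3. clear the aggregate's queue
        session.Append(aggregate.Id, events);                 // 4. append the copied events
        await session.SaveChangesAsync();                     // 5. commit
        return nextVersion;
    }
}
```

Clearing the queue before appending is safe here because the events were copied into a local array in the first step.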

FetchStreamAsync(Guid id, int? version)

The method loads the aggregate from its event stream by Id. There’s an optional version argument in case you need to load a specific version.

One interesting thing you can do is add breakpoints to all Apply methods of an aggregate, such as Quote, and then perform operations like adding and removing products or changing the quantity. All these behaviors are commands that generate events, which are persisted in the event stream. Afterward, when calling FetchStreamAsync for this aggregate again, you can follow the Apply call for each event in sequence and watch it rehydrate the aggregate.
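Rehydration itself can be illustrated with a small, self-contained sketch. It replays a list of events through Apply methods and optionally stops at a given version. The event names, the `Rehydrate` helper, and the `Items` dictionary are hypothetical; in the real project Marten does the replaying when the stream is fetched.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical events for a quote.
public record QuoteOpened(Guid QuoteId);
public record ProductAdded(Guid ProductId, int Quantity);
public record ProductRemoved(Guid ProductId);

public class Quote
{
    public Guid Id { get; private set; }
    public int Version { get; private set; }
    public Dictionary<Guid, int> Items { get; } = new();

    // One Apply overload per event type; a breakpoint here is hit once per replayed event.
    public void Apply(QuoteOpened e) => Id = e.QuoteId;
    public void Apply(ProductAdded e) => Items[e.ProductId] = e.Quantity;
    public void Apply(ProductRemoved e) => Items.Remove(e.ProductId);

    // Rebuilds the aggregate by applying stored events in order,
    // optionally stopping after the first `version` events.
    public static Quote Rehydrate(IEnumerable<object> stream, int? version = null)
    {
        var quote = new Quote();
        foreach (var e in stream.Take(version ?? int.MaxValue))
        {
            switch (e)
            {
                case QuoteOpened opened: quote.Apply(opened); break;
                case ProductAdded added: quote.Apply(added); break;
                case ProductRemoved removed: quote.Apply(removed); break;
            }
            quote.Version++;
        }
        return quote;
    }
}
```

Calling `Quote.Rehydrate(stream)` yields the latest state, while `Quote.Rehydrate(stream, 2)` yields the quote as it was after its first two events.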

The Event Store

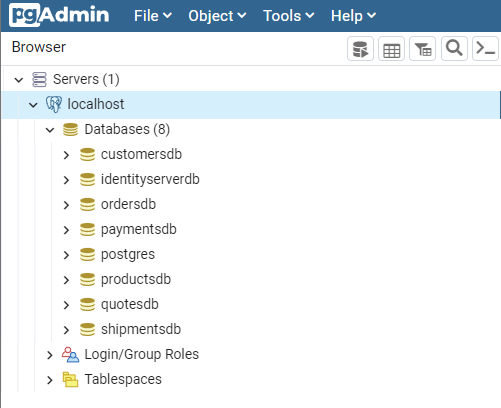

Assuming you ran the app, it should have created a couple of databases for each microservice. I’m using pgAdmin as a GUI for managing PostgreSQL, and it’s already set up as a Docker container for you in this project.

Sticking with Quotes for an order, let’s see how the data is handled under the hood. I mentioned before that every database performs read and write operations separately, using different “tables”. When querying the quotes_write event store, we can see every event that was added, in chronological order:

```sql
SELECT * FROM quotes_write.mt_events
ORDER BY seq_id ASC
```

Notice the columns: stream_id, which carries the aggregate Id itself; the version; the data, with the serialized event; the type name; and the dotnet_type, describing the namespace and class name of the event. Cool, eh?

Projections

You’ve seen that we need to load the whole sequence of events to rehydrate the aggregate and get its current state. The example above shows at least six events, but there is no limit to how many a stream can hold. Imagine a system in production handling thousands or hundreds of thousands of events per aggregate per user: every time someone queries a particular object, you would have to replay all of them to recreate it and reach the latest state, which is impractical.

To solve this problem, you need to project only the latest state of the aggregate somewhere, more specifically, to the read database or table. That’s another feature I found handy in MartenDB: inline projections. It’s easy to have an event projected immediately as it’s stored. When the UI needs the API to query any information, it reads straight from the data projected in the read database.
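The idea behind an inline projection can be shown with a small in-memory sketch: the read model is updated in the same call that stores the event, so queries never need to replay the stream. This only illustrates the concept under assumed names (`InMemoryEventStore`, `ProductAdded`, `ProductRemoved`, and the simplified `QuoteDetails`); it is not how Marten is configured or implemented.

```csharp
using System;
using System.Collections.Generic;

public record ProductAdded(Guid ProductId, int Quantity);   // hypothetical event
public record ProductRemoved(Guid ProductId);               // hypothetical event

// Read model: the shape the UI queries, holding only the latest state.
public class QuoteDetails
{
    public Guid Id { get; init; }
    public Dictionary<Guid, int> Items { get; } = new();
}

public class InMemoryEventStore
{
    // Write side: an append-only list of events.
    public List<object> Events { get; } = new();

    // Read side: one projected document per quote.
    public Dictionary<Guid, QuoteDetails> ReadModel { get; } = new();

    // "Inline" here means the projection runs in the same call that stores the event.
    public void Append(Guid quoteId, object e)
    {
        Events.Add(e);
        Project(quoteId, e);
    }

    private void Project(Guid quoteId, object e)
    {
        if (!ReadModel.TryGetValue(quoteId, out var details))
            ReadModel[quoteId] = details = new QuoteDetails { Id = quoteId };

        switch (e)
        {
            case ProductAdded added: details.Items[added.ProductId] = added.Quantity; break;
            case ProductRemoved removed: details.Items.Remove(removed.ProductId); break;
        }
    }
}
```

A query for a quote then becomes a single lookup in `ReadModel`, no matter how many events the stream has accumulated.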

The configuration is as simple as one line of code.

The first projection, QuoteDetailsProjection, inherits from SingleStreamAggregation to aggregate events by stream using pattern matching, and QuoteDetails is the object actually projected into the database table mt_doc_quotedetails:

```sql
SELECT * FROM quotes_read.mt_doc_quotedetails
ORDER BY id ASC
```

The second projection, QuoteEventHistory, is meant to be simpler. It inherits from the EventProjection base class to project each of the possible events of this aggregate, transforming it into an object that implements the IEventHistory interface. I created that interface to standardize the history of all aggregates and to ease displaying it through the stored-events-viewer Angular component, which we will check in the last chapter of the series.

One of the beauties of using Event Sourcing is that we can shape the projected data as we need it, in many different forms for different visualizations and in different places, without changing the original and immutable source of truth: the event store. Even if you completely destroy the read-only store containing the projections, you can reproject it to the exact same latest state, like replaying the frames of a movie.
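A small sketch can show why this works. If each projection is a pure function over the same list of events, the same stream can be shaped in different ways, and any projected result can be thrown away and recomputed. The event names and the two projection functions below are hypothetical and independent of Marten.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public record ProductAdded(Guid ProductId, int Quantity);   // hypothetical event
public record ProductRemoved(Guid ProductId);               // hypothetical event

public static class Projections
{
    // Shape 1: current items and quantities (latest state only).
    public static Dictionary<Guid, int> CurrentItems(IEnumerable<object> events)
    {
        var items = new Dictionary<Guid, int>();
        foreach (var e in events)
        {
            switch (e)
            {
                case ProductAdded added: items[added.ProductId] = added.Quantity; break;
                case ProductRemoved removed: items.Remove(removed.ProductId); break;
            }
        }
        return items;
    }

    // Shape 2: one history line per event, in the order the events were stored.
    public static List<string> History(IEnumerable<object> events) =>
        events.Select((e, index) => $"{index + 1}: {e.GetType().Name}").ToList();
}
```

Because neither function modifies the events, running them again after deleting their previous output gives the same result.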

Final thoughts

You learned how to store events using MartenDB and Postgres, then project their latest state into a read-only database that we can expose to the external world. In the next chapter of the series, I’ll wrap up everything I have shown since it started. See you there!

Links worth checking

- Aggregates, Events, Repositories with Marten

- EventSourcing.NetCore

- CQRS pattern

- Event Streaming is not Event Sourcing! by Oskar Dudycz

- Optimistic concurrency for pessimistic times

- Domain events

- Ubiquitous language

- Bounded context