In the previous post, I talked about persisting domain events into the event store, then projecting and reading them, all using MartenDB. Now it’s time to wrap up everything covered so far, add the missing infrastructure pieces, and finish the backend.

- Part 1 - Project’s overview

- Part 2 - Strategic and Tactical Design

- Part 3 - Domain events and Event Sourcing

- Part 4 - Implementing Event Sourcing with MartenDB

- Part 5 - Wrapping up infrastructure (Docker, API gateway, IdentityServer, Kafka)

- Part 6 - Angular SPA and API consumption

Docker containers

I couldn’t wrap up this series without highlighting the importance of providing an out-of-the-box developer experience. All you need to run the project is to have Docker installed — no extra setup, no dependency hell.

If you’re new to Docker, it’s a widely used open-source platform for building, deploying, and managing containerized applications. In this project, I used Docker Compose to orchestrate a container for each microservice. I also used public Docker images for PostgreSQL, Kafka, and related services.

Once everything is defined in the docker-compose.yml file, spinning up the full environment is as simple as running:

```shell
$ docker-compose up
```

Ocelot - API Gateway

Given our microservices architecture, we have multiple APIs — typically one per service. From the perspective of the frontend (SPA), calling each service individually is not only impractical but also undesirable. Each API lives on a separate port and inside a different Docker container.

We don’t want the SPA to know anything about internal infrastructure, such as which microservice handles what or where it’s running. Instead, we use an API Gateway to abstract this complexity.

For this, I chose Ocelot, a lightweight API Gateway for .NET. It allows us to centralize all routing behind a single entry point: http://localhost:5000. That’s the only address the SPA needs to be aware of.

The routes are defined in ocelot.json, where I used the Docker service name as the downstream host. Here’s the current structure:

```
├── Crosscutting
│   ├── EcommerceDDD.ApiGateway
│   │   └── Ocelot
│   │       ├── ocelot.json
│   │       ├── ocelot.accounts.json
│   │       ├── ocelot.customers.json
│   │       ├── ocelot.global.json
│   │       ├── ocelot.inventory.json
│   │       ├── ocelot.orders.json
│   │       ├── ocelot.payments.json
│   │       ├── ocelot.products.json
│   │       ├── ocelot.quotes.json
│   │       ├── ocelot.shipments.json
│   │       └── ocelot.signalr.json
```


⚠️ The ocelot.json file is a bundle of all the individual configuration files listed below it. Splitting routes into smaller files keeps each one focused and the whole setup organized. The merge happens automatically in Program.cs:

```csharp
builder.Configuration
    .SetBasePath(Directory.GetCurrentDirectory())
    .AddJsonFile("Ocelot/ocelot.json", optional: false, reloadOnChange: true)
    // Merge the per-service ocelot.*.json files from the Ocelot folder
    // into the primary file, Ocelot/ocelot.json
    .AddOcelot(
        folder: "Ocelot",
        env: builder.Environment,
        mergeTo: MergeOcelotJson.ToFile,
        primaryConfigFile: "Ocelot/ocelot.json",
        reloadOnChange: true
    )
    .AddEnvironmentVariables();
```

Refer to Ocelot’s official documentation for more options and advanced configurations.
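To make the merge less magical, here is a minimal sketch of what combining the per-service files amounts to: their Routes arrays are concatenated into one, and the GlobalConfiguration section comes along. This is not Ocelot’s implementation (AddOcelot with MergeOcelotJson.ToFile already does the job); the OcelotRouteMerger class is hypothetical and only handles those two top-level sections.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json.Nodes;

// Hypothetical helper: illustrates the idea behind Ocelot's file merge,
// not Ocelot's actual implementation.
public static class OcelotRouteMerger
{
    // Concatenates the "Routes" arrays of several per-service files into one
    // configuration object and keeps the first "GlobalConfiguration" found.
    public static JsonObject Merge(IEnumerable<string> fileContents)
    {
        var routes = new JsonArray();
        JsonNode? globalConfiguration = null;

        foreach (var content in fileContents)
        {
            var root = JsonNode.Parse(content) as JsonObject
                ?? throw new ArgumentException("Each file must contain a JSON object.");

            if (root["Routes"] is JsonArray fileRoutes)
            {
                foreach (var route in fileRoutes)
                {
                    // A JsonNode can only have one parent, so add a fresh copy.
                    if (route is not null)
                        routes.Add(JsonNode.Parse(route.ToJsonString()));
                }
            }

            if (globalConfiguration is null && root["GlobalConfiguration"] is JsonNode global)
                globalConfiguration = JsonNode.Parse(global.ToJsonString());
        }

        var merged = new JsonObject { ["Routes"] = routes };
        if (globalConfiguration is not null)
            merged["GlobalConfiguration"] = globalConfiguration;
        return merged;
    }
}
```

This is also why each per-service file can stay small: Ocelot only ever sees a single Routes array.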

EcommerceDDD.IdentityServer

When registering a new customer, the system requires an email and password. These are authentication concerns, not part of the customer’s domain model, so they are handled in a separate project: Crosscutting/EcommerceDDD.IdentityServer.

ASP.NET Core Identity

ASP.NET Core Identity is an API that supports user interface (UI) login functionality. It manages users, passwords, profile data, roles, claims, tokens, email confirmation, and more.

I configured it using the same PostgreSQL instance used elsewhere in the project. Migrations are generated using IdentityApplicationDbContext:

```shell
dotnet ef migrations add InitialMigration -c IdentityApplicationDbContext
```

You can find these migrations in the Database/Migrations folder.

IdentityServer

IdentityServer is an OpenID Connect and OAuth 2.0 framework for ASP.NET Core.

IdentityServer is handy for authentication and integrates easily with ASP.NET Core Identity. In Program.cs, I wired the two together using IdentityServer’s .AddAspNetIdentity extension method.
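The project’s Program.cs is not reproduced here, so the following is only a minimal sketch of how such wiring typically looks, assuming Duende IdentityServer with its EntityFramework and AspNetIdentity packages plus the Npgsql EF Core provider (IdentityServer4 exposes the same extension methods). ApplicationUser, the "DefaultConnection" connection string name, and this IdentityApplicationDbContext body are hypothetical. ASP.NET Core Identity is registered before IdentityServer, as the IdentityServer documentation recommends when using .AddAspNetIdentity.

```csharp
using System;
using Microsoft.AspNetCore.Builder;
using Microsoft.AspNetCore.Identity;
using Microsoft.AspNetCore.Identity.EntityFrameworkCore;
using Microsoft.EntityFrameworkCore;
using Microsoft.Extensions.Configuration;
using Microsoft.Extensions.DependencyInjection;

var builder = WebApplication.CreateBuilder(args);

// Assumption: the connection string name is illustrative.
var connectionString = builder.Configuration.GetConnectionString("DefaultConnection")
    ?? throw new InvalidOperationException("Connection string not found.");
// Migrations live in this project (see the dotnet ef commands).
var migrationsAssembly = typeof(Program).Assembly.GetName().Name;

// ASP.NET Core Identity: users, passwords, roles and claims in PostgreSQL.
builder.Services.AddDbContext<IdentityApplicationDbContext>(options =>
    options.UseNpgsql(connectionString));

builder.Services.AddIdentity<ApplicationUser, IdentityRole>()
    .AddEntityFrameworkStores<IdentityApplicationDbContext>()
    .AddDefaultTokenProviders();

// IdentityServer, registered after ASP.NET Core Identity.
builder.Services.AddIdentityServer()
    // Clients, scopes and resources (ConfigurationDbContext).
    .AddConfigurationStore(options =>
        options.ConfigureDbContext = db =>
            db.UseNpgsql(connectionString, sql => sql.MigrationsAssembly(migrationsAssembly)))
    // Grants, consents and other operational data (PersistedGrantDbContext).
    .AddOperationalStore(options =>
        options.ConfigureDbContext = db =>
            db.UseNpgsql(connectionString, sql => sql.MigrationsAssembly(migrationsAssembly)))
    // Lets IdentityServer authenticate ASP.NET Core Identity users.
    .AddAspNetIdentity<ApplicationUser>();

var app = builder.Build();
app.UseIdentityServer();
app.Run();

// Hypothetical user type for this sketch.
public class ApplicationUser : IdentityUser { }

// Hypothetical context body; the name matches the one used for migrations.
public class IdentityApplicationDbContext : IdentityDbContext<ApplicationUser>
{
    public IdentityApplicationDbContext(DbContextOptions<IdentityApplicationDbContext> options)
        : base(options) { }
}
```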

Two more migrations complete the persistence layer required by IdentityServer:

```shell
dotnet ef migrations add InitialIdentityServerConfigurationDbMigration -c ConfigurationDbContext -o Migrations/IdentityServer/ConfigurationDb
dotnet ef migrations add InitialIdentityServerPersistedGrantDbMigration -c PersistedGrantDbContext -o Migrations/IdentityServer/PersistedGrantDb
```

To bootstrap initial data such as clients, scopes, and resources, I created a DataSeeder.cs file.

With the migrations applied when the project runs, we should have this complete database structure:

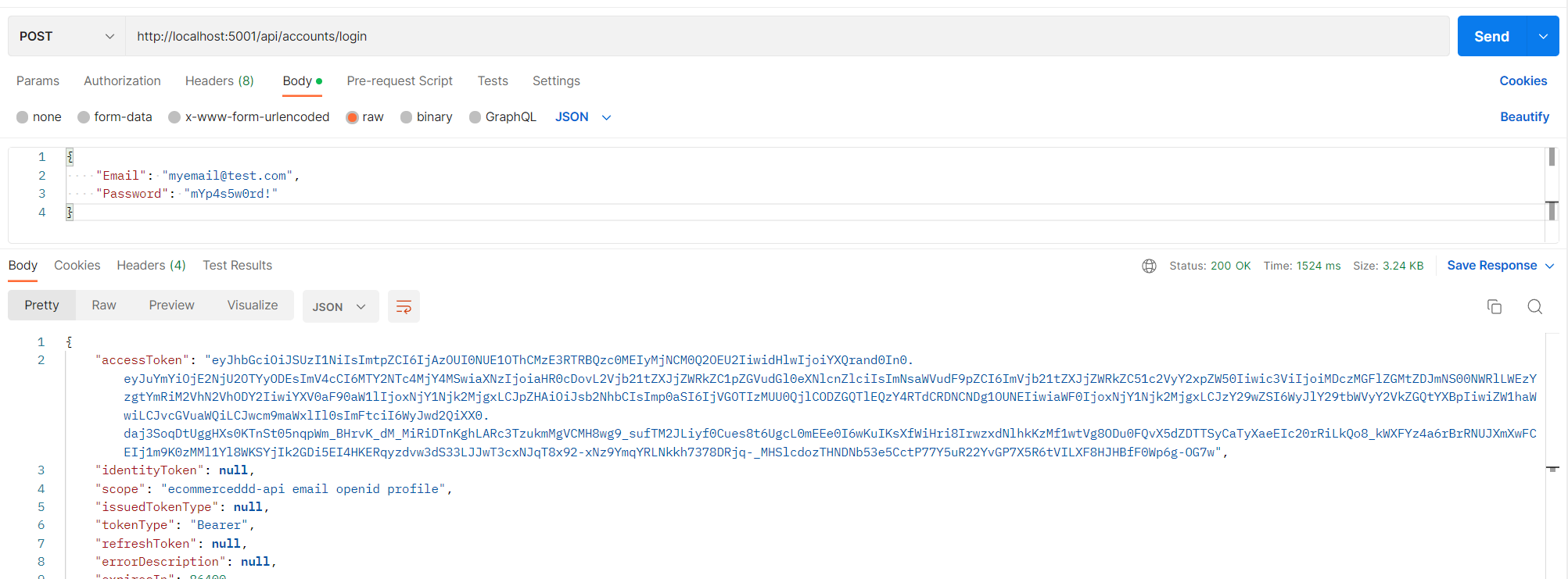

Issuing tokens

Once everything is running, the ecommerceddd-identityserver container is available at http://localhost:5001. It exposes an AccountsController used to create users and request tokens.

The controller uses an ITokenRequester service that wraps the logic of requesting user tokens and application tokens. It simplifies both authentication and service-to-service communication.

ITokenRequester relies on TokenIssuerSettings.cs, a configuration record that matches a section in each microservice’s appsettings.json and provides the information needed to issue tokens:

User Token

```json
"TokenIssuerSettings": {
  "Authority": "http://ecommerceddd-identityserver",
  "ClientId": "ecommerceddd.user_client",
  "ClientSecret": "secret234554^&%&^%&^f2%%%",
  "Scope": "openid email read write delete"
}
```

Application Token

```json
"TokenIssuerSettings": {
  "Authority": "http://ecommerceddd-identityserver",
  "ClientId": "ecommerceddd.application_client",
  "ClientSecret": "secret33587^&%&^%&^f3%%%",
  "Scope": "ecommerceddd-api.scope read write delete"
}
```

User tokens are issued on behalf of a specific user during authentication. They represent the user’s identity, carry information such as the user ID and claims, and have a shorter lifespan. Application tokens, in contrast, authenticate and authorize the application itself rather than a specific user. Because this machine-to-machine communication does not depend on individual user sessions, application tokens can have a longer lifespan.
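To show how the two settings sections translate into token requests, here is a sketch of the OAuth 2.0 request bodies an ITokenRequester-like service could send. It is not the project’s code: TokenRequestBuilder is hypothetical, the user token is assumed to use the resource owner password grant (email and password), and the application token the client credentials grant. The /connect/token path is IdentityServer’s default token endpoint.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Mirrors the "TokenIssuerSettings" section of appsettings.json.
public sealed record TokenIssuerSettings(
    string Authority, string ClientId, string ClientSecret, string Scope)
{
    // Reads the section from a raw appsettings.json string.
    public static TokenIssuerSettings FromAppSettings(string appSettingsJson)
    {
        using var document = JsonDocument.Parse(appSettingsJson);
        var section = document.RootElement.GetProperty("TokenIssuerSettings");
        return section.Deserialize<TokenIssuerSettings>()
            ?? throw new InvalidOperationException("TokenIssuerSettings section is empty.");
    }
}

// Hypothetical helper: builds OAuth 2.0 token request bodies
// (to be sent as application/x-www-form-urlencoded).
public static class TokenRequestBuilder
{
    // IdentityServer's default token endpoint is /connect/token.
    public static Uri TokenEndpoint(TokenIssuerSettings settings) =>
        new(new Uri(settings.Authority.TrimEnd('/') + "/"), "connect/token");

    // User token: assumes the resource owner password grant.
    public static Dictionary<string, string> ForUser(
        TokenIssuerSettings settings, string userName, string password) => new()
    {
        ["grant_type"] = "password",
        ["client_id"] = settings.ClientId,
        ["client_secret"] = settings.ClientSecret,
        ["scope"] = settings.Scope,
        ["username"] = userName,
        ["password"] = password,
    };

    // Application token: client credentials grant, no user involved.
    public static Dictionary<string, string> ForApplication(TokenIssuerSettings settings) => new()
    {
        ["grant_type"] = "client_credentials",
        ["client_id"] = settings.ClientId,
        ["client_secret"] = settings.ClientSecret,
        ["scope"] = settings.Scope,
    };
}
```

Either dictionary can be wrapped in a FormUrlEncodedContent and posted to TokenEndpoint(settings) with an HttpClient; libraries such as IdentityModel also offer ready-made helpers for both grants.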

Scopes, Roles, and Policies

The scopes defined in the token settings are more than just metadata — they directly control what operations the token bearer is authorized to perform. Each API endpoint is protected using [Authorize] attributes that enforce access rules via roles and policies.

For example:

```csharp
[Authorize(Roles = Roles.Customer, Policy = Policies.CanRead)]
```

This ensures that only authenticated users with the Customer role who also satisfy the CanRead policy can access the endpoint. I also defined CanWrite and CanDelete policies and applied them where it makes sense.

For machine-to-machine communication, application tokens are restricted similarly:

```csharp
[Authorize(Roles = Roles.M2MAccess)]
```

By combining scopes, roles, and policies, you can create a fine-grained security model that controls access both at the user level and the system level. These policies are typically defined using ASP.NET Core’s AddAuthorization setup in the Program.cs of each microservice.
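For illustration, here is a minimal sketch of such a setup, assuming each policy simply requires the matching scope value (read, write, delete) from the token settings above. The constant values in Roles and Policies and the AuthorizationSetup helper are hypothetical; the project’s actual policy definitions may differ.

```csharp
using Microsoft.AspNetCore.Authorization;

// Hypothetical constant values for the names used in the attributes above.
public static class Roles
{
    public const string Customer = "Customer";
    public const string M2MAccess = "M2MAccess";
}

public static class Policies
{
    public const string CanRead = "CanRead";
    public const string CanWrite = "CanWrite";
    public const string CanDelete = "CanDelete";
}

public static class AuthorizationSetup
{
    // Each policy requires the matching scope value from the access token.
    // Assumes scopes arrive as individual "scope" claims; adjust the claim
    // type if your JWT handler maps it differently.
    public static void AddScopePolicies(AuthorizationOptions options)
    {
        options.AddPolicy(Policies.CanRead, policy => policy.RequireClaim("scope", "read"));
        options.AddPolicy(Policies.CanWrite, policy => policy.RequireClaim("scope", "write"));
        options.AddPolicy(Policies.CanDelete, policy => policy.RequireClaim("scope", "delete"));
    }
}
```

In Program.cs, this would be registered with `builder.Services.AddAuthorization(AuthorizationSetup.AddScopePolicies);`.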

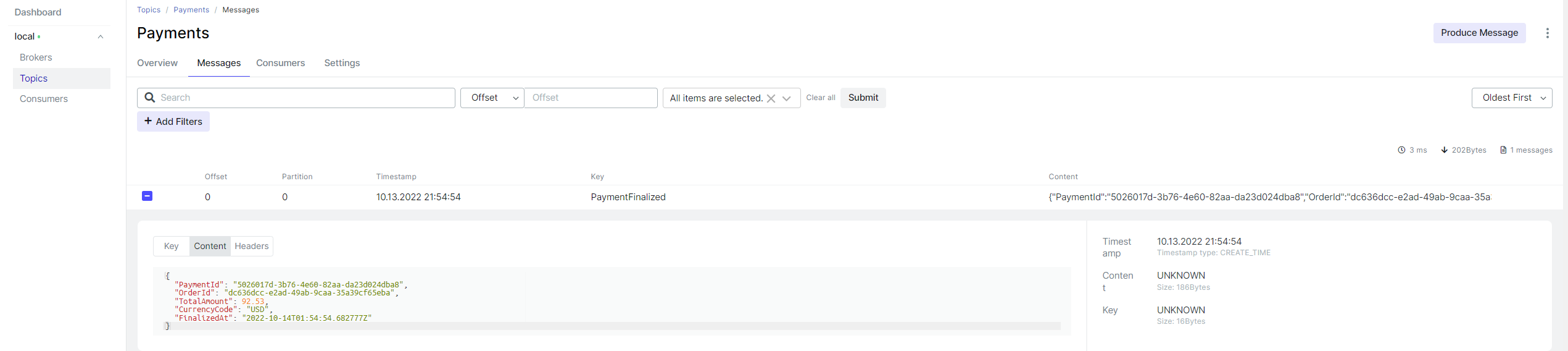

Kafka topics

Apache Kafka is an open-source distributed event streaming platform used by thousands of companies for high-performance data pipelines, streaming analytics, data integration, and mission-critical applications.

One last but essential aspect of the infrastructure is letting different bounded contexts communicate through a message broker. I mentioned integration events in previous chapters: they implement the IIntegrationEvent interface, which inherits from EcommerceDDD.Core.Domain.INotification.

I’m using Kafka as the message broker here, but there are other good options, such as RabbitMQ, Memphis, and Azure Service Bus.

The idea is simple: some microservices produce integration events, while others consume them. In EcommerceDDD.Core.Infrastructure/Kafka, you will find a KafkaConsumer class. The EcommerceDDD.OrderProcessing microservice registers it in Program.cs and subscribes it to a list of topics defined in appsettings.json.

When an event reaches a topic, the consumer reads it from the stream and deserializes it into the corresponding integration event, which OrderSaga.cs is configured to handle:

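```csharp
public class OrderSaga :
    IEventHandler<OrderPlaced>,
    IEventHandler<OrderProcessed>,
    IEventHandler<PaymentFinalized>,
    IEventHandler<ShipmentFinalized>
{
    // One handler per integration event; each one moves the
    // order workflow to its next step.
}
```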

This approach was inspired by the repo I mentioned before. Check it out!
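The consume, deserialize, and dispatch step described above can be sketched as follows, leaving Kafka itself out. Everything here is hypothetical: the simplified IIntegrationEvent and IEventHandler&lt;T&gt; stand-ins, the IntegrationEventDispatcher, the InMemoryOrderSaga, and especially the assumption that each message carries its event type name (for example, in a header) alongside a JSON payload.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;
using System.Threading.Tasks;

// Simplified, hypothetical stand-ins for the project's abstractions.
public interface IIntegrationEvent { }

public interface IEventHandler<in TEvent> where TEvent : IIntegrationEvent
{
    Task Handle(TEvent @event);
}

public sealed record OrderPlaced(Guid OrderId, Guid CustomerId) : IIntegrationEvent;

// Routes a consumed message (event type name + JSON payload) to its handler.
public sealed class IntegrationEventDispatcher
{
    private readonly Dictionary<string, Func<string, Task>> _routes = new();

    // Uses the CLR type name as the routing key (an assumption).
    public void Register<TEvent>(IEventHandler<TEvent> handler)
        where TEvent : class, IIntegrationEvent
    {
        _routes[typeof(TEvent).Name] = payload =>
        {
            var @event = JsonSerializer.Deserialize<TEvent>(payload)
                ?? throw new InvalidOperationException($"Empty {typeof(TEvent).Name} payload.");
            return handler.Handle(@event);
        };
    }

    // Unknown event types are skipped in this sketch.
    public Task DispatchAsync(string eventType, string payload) =>
        _routes.TryGetValue(eventType, out var route) ? route(payload) : Task.CompletedTask;
}

// Toy handler standing in for OrderSaga: records the orders it has seen.
public sealed class InMemoryOrderSaga : IEventHandler<OrderPlaced>
{
    public List<Guid> Handled { get; } = new();

    public Task Handle(OrderPlaced @event)
    {
        Handled.Add(@event.OrderId);
        return Task.CompletedTask;
    }
}
```

A real consumer would call DispatchAsync for every message it reads from the subscribed topics and commit the offset only after the handler succeeds.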

Back to Kafka: with kafka-ui, you can easily browse the existing topics and inspect their messages.

How to ensure transactional consistency across microservices?

When using events to communicate across services, consistency becomes crucial. If a message isn’t published, the entire workflow might break.

To handle this, I implemented the Outbox Pattern in MartenRepository.cs:

The key method is AppendToOutbox(INotification @event). Unlike domain events, which are handled by AppendEventsAsync, integration events are stored in a separate outbox table, but still within the same transaction.
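As a rough, in-memory illustration of the idea, the sketch below stages domain events and outbox messages together and makes them visible only on commit, the way a single database transaction would. FakeSession, OutboxMessage, and this PaymentFinalized shape are hypothetical. In a Marten-based repository, the equivalent would be appending the events with session.Events.Append and storing the outbox message with session.Store in the same IDocumentSession, so that one SaveChangesAsync call commits both.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Simplified, hypothetical marker for integration events.
public interface IIntegrationEvent { }

public sealed record PaymentFinalized(Guid OrderId, decimal Amount) : IIntegrationEvent;

// Hypothetical shape of an outbox row.
public sealed record OutboxMessage(Guid Id, string EventType, string Payload, DateTime CreatedAtUtc)
{
    // Serialize with the runtime type so every property of the event is kept.
    public static OutboxMessage From(IIntegrationEvent @event) =>
        new(Guid.NewGuid(),
            @event.GetType().Name,
            JsonSerializer.Serialize(@event, @event.GetType()),
            DateTime.UtcNow);
}

// In-memory stand-in for a transactional session: nothing is visible
// until Commit, and events and outbox rows become visible together.
public sealed class FakeSession
{
    private readonly List<object> _pendingEvents = new();
    private readonly List<OutboxMessage> _pendingOutbox = new();

    public List<object> Events { get; } = new();
    public List<OutboxMessage> Outbox { get; } = new();

    public void AppendEvents(IEnumerable<object> events) => _pendingEvents.AddRange(events);

    public void AppendToOutbox(IIntegrationEvent @event) =>
        _pendingOutbox.Add(OutboxMessage.From(@event));

    // Plays the role of a single commit: both lists are published in one
    // step (in a real system, atomicity comes from the database transaction).
    public void Commit()
    {
        Events.AddRange(_pendingEvents);
        Outbox.AddRange(_pendingOutbox);
        _pendingEvents.Clear();
        _pendingOutbox.Clear();
    }
}
```

Once the outbox row is committed, a separate process (here, Debezium) is responsible for publishing it, so the service never has to write to the database and Kafka in the same operation.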

Initially, I had a KafkaConsumer background service constantly polling the messages written to each microservice’s outbox table, but I switched to CDC (Change Data Capture) with Debezium, which watches the table and automatically publishes new events to Kafka.

I wrote an entire post, Consistent message delivery with Transactional Outbox Pattern, with details on using this technology. Remember to check it out!

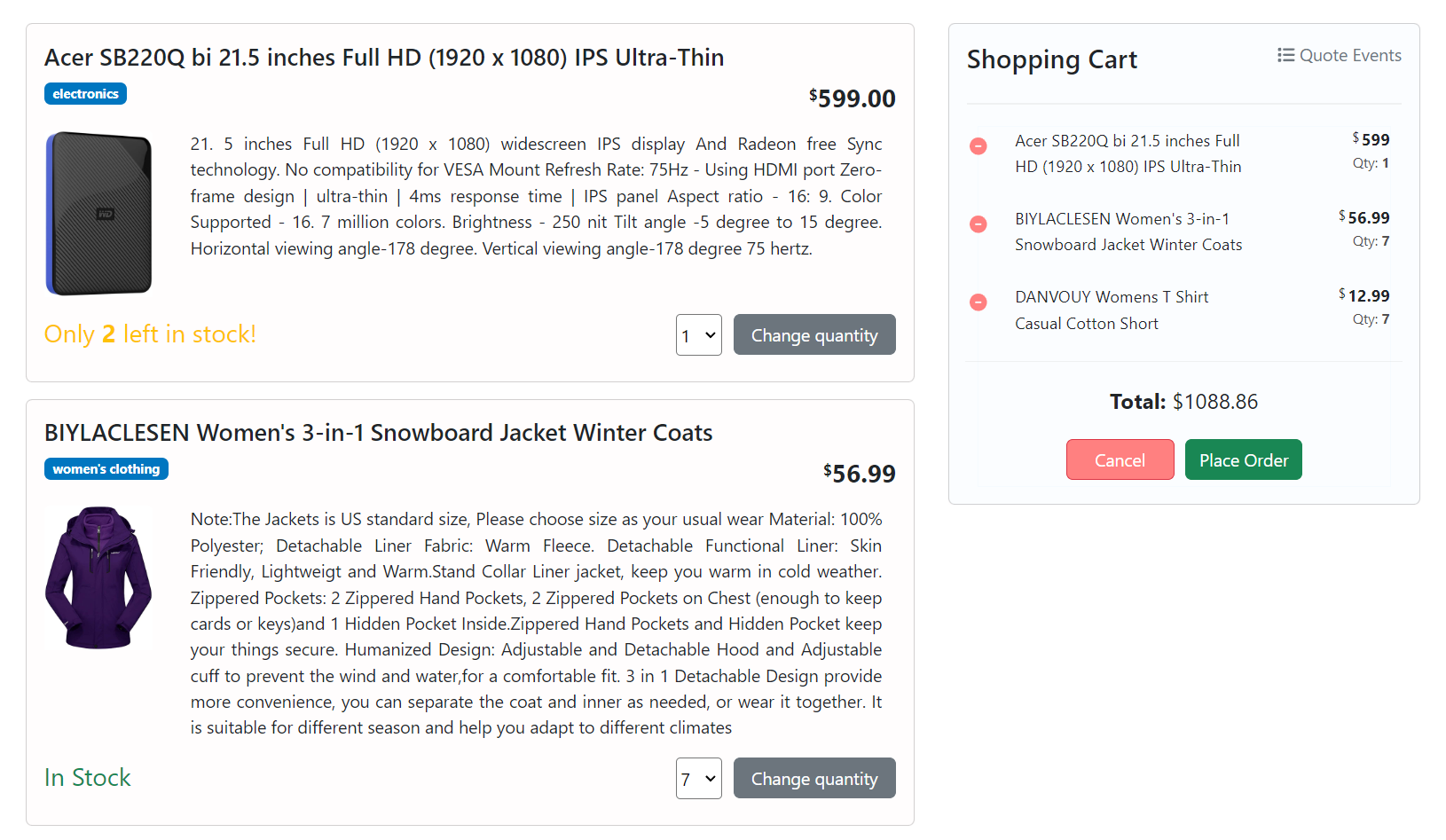

SAGA - Placing order into the chaos

At this point, we can place orders: they are processed asynchronously on the server, and integration events fan out into other internal commands. To coordinate all of this in a logical sequence, we need a SAGA, a design pattern for managing data consistency in distributed transaction scenarios like this one. To illustrate the flow, check the events (orange sticky notes) and commands (blue sticky notes) below:

The successful ordering workflow is handled in OrderSaga.cs. However, there are failure cases you have to be prepared to handle by compensating for the flow. For example, what if you order more products than are available in stock? Or what if you exceed your credit limit and the payment can’t be completed? I implemented compensation events in each microservice and placed the handling for these cases in OrderSagaCompensation.cs, which cancels the order.
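The happy path in OrderSaga.cs boils down to “when this event arrives, send the next command”. Here is a deliberately simplified sketch of that orchestration. Only the four event names come from the OrderSaga declaration earlier in this post; the command names, the OrderId-only payloads, and the send delegate are hypothetical placeholders, not the project’s actual types.

```csharp
using System;

// Event names as in OrderSaga; payloads simplified to the order id.
public sealed record OrderPlaced(Guid OrderId);
public sealed record OrderProcessed(Guid OrderId);
public sealed record PaymentFinalized(Guid OrderId);
public sealed record ShipmentFinalized(Guid OrderId);

// Hypothetical commands, one per step of the workflow.
public sealed record ProcessOrder(Guid OrderId);
public sealed record RequestPayment(Guid OrderId);
public sealed record RequestShipment(Guid OrderId);
public sealed record CompleteOrder(Guid OrderId);

// Orchestrator: each handled event triggers the next command.
public sealed class OrderSagaSketch
{
    private readonly Action<object> _send;

    public OrderSagaSketch(Action<object> send) => _send = send;

    public void Handle(OrderPlaced e) => _send(new ProcessOrder(e.OrderId));
    public void Handle(OrderProcessed e) => _send(new RequestPayment(e.OrderId));
    public void Handle(PaymentFinalized e) => _send(new RequestShipment(e.OrderId));
    public void Handle(ShipmentFinalized e) => _send(new CompleteOrder(e.OrderId));
}
```

Keeping the sequencing in one orchestrator makes the workflow easy to follow, at the cost of the saga knowing about every participating service.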

To test the compensation flow, try either spending more than your credit limit or ordering the maximum quantity of several products, as shown below:

Each compensation event leads to a cancellation command, including a reason and reference. In real-world scenarios, you’d likely implement a more nuanced approach (e.g., backorders), but this implementation demonstrates the concept well.
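In the same spirit, the compensation side can be sketched as handlers that turn each failure event into a cancellation command carrying a reason and a reference. The event names, the CancellationReason values, and the CancelOrder shape are assumptions for illustration only.

```csharp
using System;

// Hypothetical failure events raised by other bounded contexts.
public sealed record ProductWasOutOfStock(Guid OrderId, Guid ProductId);
public sealed record CustomerReachedCreditLimit(Guid OrderId, Guid CustomerId);

public enum CancellationReason { OutOfStock, CreditLimitReached }

// Hypothetical command: which order to cancel, why, and what triggered it.
public sealed record CancelOrder(Guid OrderId, CancellationReason Reason, Guid ReferenceId);

// Each compensation event is translated into a cancellation command.
public sealed class OrderSagaCompensationSketch
{
    private readonly Action<CancelOrder> _send;

    public OrderSagaCompensationSketch(Action<CancelOrder> send) => _send = send;

    public void Handle(ProductWasOutOfStock e) =>
        _send(new CancelOrder(e.OrderId, CancellationReason.OutOfStock, e.ProductId));

    public void Handle(CustomerReachedCreditLimit e) =>
        _send(new CancelOrder(e.OrderId, CancellationReason.CreditLimitReached, e.CustomerId));
}
```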

Final thoughts

This concludes the backend portion of the solution.

Remember: microservices are not a silver bullet. Monoliths are still viable and often better for small teams or early-stage products. Microservices introduce complexity that only pays off when your architecture requires the scalability, autonomy, and resilience they provide. With all that said, we’re now ready for the next and final chapter, where I’ll focus exclusively on the SPA that makes all this shine. See you there!

Links worth checking

- Docker

- Docker Hub

- IdentityServer

- Ocelot

- ASP.NET Core Identity

- Implementing event-based communication between microservices

- Creating And Validating JWT Tokens In C# .NET

- IdentityServer Authentication with ASP.NET Identity for User Management

- Domain events

- Ubiquitous language

- Bounded context

- SAGA